Geek alert. It's not often that I get technical on this blog, but today's topic was spurred by my recent attendance at Airbnb's OpenAir 2016 Tech Conference. So consider yourself warned.

Bias complaints from Airbnb customers were the hot topic at OpenAir 2016, which was held June 8, 2016 in San Francisco. Recent media coverage of race problems on Airbnb by USA Today and BuzzFeed has put the company under fire. A second focus of the conference was trust & safety. So how do diversity, trust & safety, and machine learning relate to each other? Hang in with me and we'll get there.

At the conference, Airbnb chose to highlight workplace discrimination concerns with a diversity-focused panel featuring Ellen Pao. If you don't know her, she sued her former employer, the venture capital firm Kleiner Perkins, for discrimination. I missed this panel discussion because I was geeking out on machine learning (see below). I'm sure there was a heated discussion of how firms can bring equality to the workplace, and of how poorly they are doing it. A separate panel on bias in recruiting and hiring featured Stephanie Lampkin (@stephaneurial) of Blendoor, an MIT geek. Blendoor offers an app that matches candidates to jobs based on merit, not on an individual's looks. Other presentations included 'Inequality in the 21st Century' by Dr. Hedy Lee, Professor of Sociology at the University of Washington, and 'Gender Neutrality' by Monique Morrow, CTO at Cisco. These are stories and calls to action I've been hearing for 25+ years, so it's frightening that they're still topics of conversation. Sigh.

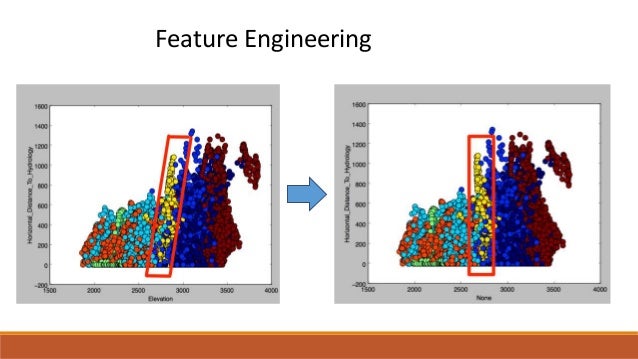

As a Hispanic woman in technology, I always have these areas of discrimination on my mind. I lived it in the early '80s as an undergraduate engineering student in California. Because this seems like 'old hat' to me, I bypassed some of the diversity talks to focus on my recent interest, machine learning. At OpenAir, I attended an extremely interesting presentation, 'Scaling the Human Touch with Machine Learning', by Avneesh Saluja and Ariana Radianto of Airbnb. This was a presentation for machine learning geeks. There were two important take-aways for me. The first was the recommendation to really understand feature engineering (this excellent article by Jason Brownlee provides an overview of it). Why is feature engineering so important? Well-engineered features help the data scientist interpret the results more easily, which in turn makes those results easier to explain to non-data scientists. That's important when making real-world decisions.

Graphic from: http://www.slideshare.net/ChandanaTL/feature-engineering-on-forest-cover-type-data-with-decision-trees
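To make feature engineering a little more concrete, here is a minimal Python sketch of my own (not something shown in the Airbnb talk). It assumes a hypothetical booking record with `request_date`, `check_in`, and `check_out` fields and turns those raw dates into a few features anyone can read at a glance.

```python
from datetime import date


def engineer_features(booking: dict) -> dict:
    """Turn a raw booking record into a few human-readable features.

    The field names (request_date, check_in, check_out) are hypothetical.
    """
    check_in = booking["check_in"]
    check_out = booking["check_out"]
    return {
        # How far in advance the guest booked, in days
        "lead_time_days": (check_in - booking["request_date"]).days,
        # Length of stay in nights
        "nights": (check_out - check_in).days,
        # True if the stay starts on a Friday (4) or Saturday (5)
        "weekend_start": check_in.weekday() in (4, 5),
    }


raw = {
    "request_date": date(2016, 5, 20),
    "check_in": date(2016, 6, 10),
    "check_out": date(2016, 6, 13),
}
print(engineer_features(raw))
# {'lead_time_days': 21, 'nights': 3, 'weekend_start': True}
```

Each derived feature has a plain-English meaning ("booked three weeks ahead", "a three-night weekend stay"), and that is exactly what makes a model built on such features easier to explain.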

The second take-away was the use of learned dense word embeddings in natural language processing. Because feature engineering can be so time-consuming, embeddings offer another way of understanding the data at hand: instead of hand-crafting features, the model learns a compact numeric vector for each word from the data itself. Dr. Saluja provided insights into modeling ticket routing with these learned embeddings. I don't claim to be an expert in either feature engineering or word embeddings, but I definitely understand the need to know how to interpret your data.
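Here's a toy Python sketch of the general idea behind routing tickets with word embeddings. It is not Airbnb's model: the three-dimensional vectors below are made up by hand (real embeddings are learned from data and have far more dimensions), and the queue names are hypothetical. A ticket is represented as the average of its word vectors and sent to the queue whose average vector is most similar by cosine similarity.

```python
import math

# Toy, hand-made "embeddings" (hypothetical). Real ones are learned from text.
EMBEDDINGS = {
    "refund": [0.9, 0.1, 0.0],
    "charge": [0.8, 0.2, 0.1],
    "payment": [0.85, 0.15, 0.05],
    "key": [0.1, 0.9, 0.1],
    "lockbox": [0.05, 0.95, 0.0],
    "checkin": [0.2, 0.8, 0.2],
}


def embed(text):
    """Average the vectors of known words; unknown words are skipped."""
    vectors = [EMBEDDINGS[w] for w in text.lower().split() if w in EMBEDDINGS]
    if not vectors:
        return None
    return [sum(dim) / len(vectors) for dim in zip(*vectors)]


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


# Hypothetical support queues, each represented by the average of a few seed words
QUEUES = {
    "billing": embed("refund charge payment"),
    "access": embed("key lockbox checkin"),
}


def route(ticket):
    """Send a ticket to the most similar queue, or to a human if no words are known."""
    vec = embed(ticket)
    if vec is None:
        return "manual_review"
    return max(QUEUES, key=lambda q: cosine(vec, QUEUES[q]))


print(route("please refund the charge"))     # billing
print(route("cannot find the lockbox key"))  # access
```

The appeal is that nobody had to write a rule saying "lockbox means access problem"; with learned embeddings, similar words end up with similar vectors on their own.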

On a less geeky note, other talks at OpenAir focused on trust. One was a panel on measuring trust at Airbnb, kicked off by Joe Gebbia, co-founder of the company, who reported on the findings of two professors from Stanford. They found that (1) homophily, the tendency of individuals to associate and bond with similar others ('birds of a feather flock together'), plays a significant role in whether a host will invite a guest into their home, and (2) the bias of homophily can be counteracted with a reputation system. The standard star ratings used in peer-2-peer networks such as Airbnb and eBay help reduce discrimination. The Stanford profs found that a person with 10 or more reviews and a 5/5 star rating is more likely to be trusted as an investment opportunity. The study was based on a decision to invest in someone, rather than a willingness to host them. Frankly, I'm not sure why they had to mask the reason for selecting an individual. Is investing the same thing as hosting? I'd think not. Nonetheless, the study provided some insight into how a star reputation score can reduce the bias from homophily.

Speaking of star reputation scoring, Airbnb's practice of reporting hosts' ratings but not guests' ratings has always perplexed me. Why does Airbnb break down host ratings into accuracy, communication, cleanliness, location, check-in, and value, but offer no breakdown of guest ratings? Would reporting category star ratings for both hosts and guests help reduce discrimination? I'm not sure, but it's worth thinking about.
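Going back to homophily for a moment, one rough way to put a number on it is sketched below in Python. This is my own simplification, not the Stanford study's method: compare the share of a host's accepted guests who belong to the host's own group with that share among everyone who asked to stay. The single group label per person is hypothetical and a big simplification of real people.

```python
def same_group_share(host_group, guests):
    """Fraction of guests whose (hypothetical) group label matches the host's."""
    if not guests:
        return 0.0
    return sum(g == host_group for g in guests) / len(guests)


def homophily_gap(host_group, requests, accepted):
    """Same-group share among accepted guests minus the share among all requests.

    A positive gap means the host accepts similar guests more often than the
    request pool alone would predict, which is one rough signal of homophily.
    """
    return same_group_share(host_group, accepted) - same_group_share(host_group, requests)


# Illustrative input only: six requests, three accepted
requests = ["A", "A", "B", "B", "B", "B"]
accepted = ["A", "A", "B"]
print(round(homophily_gap("A", requests, accepted), 3))  # 0.333
```

A reputation signal like a 5/5 rating with 10+ reviews could then be added to see whether it shrinks that gap, which is the kind of question the study was asking.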

A trust & safety focus should mean thorough vetting of members joining the network. A person can hide behind a star rating system, but it's hard to hide on social media. So in my own research I try to understand how social media paints a picture of an individual. How people present themselves on social media should tell a story about them. And since homophily extends to the people in your social network, this could be an important part of the story. So with a bit of data mining and network analysis, it may be possible to determine whether someone is a racist, or maybe just uncomfortable around people dissimilar from themselves. Is the diversity of the people in an individual's social network a good indication of how open they are? I would think so.
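As a minimal sketch of measuring how diverse a social network is, assume each contact already carries a (hypothetical) group label, which is itself the hard part of the data mining. Blau's index of heterogeneity, 1 - Σ p_i² where p_i is the share of contacts in group i, is one standard choice: 0 means everyone is in the same group, and it approaches 1 - 1/k when contacts are spread evenly over k groups.

```python
from collections import Counter


def blau_index(labels):
    """Blau's index of heterogeneity: 1 - sum of squared group shares."""
    if not labels:
        return 0.0
    n = len(labels)
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


print(blau_index(["A", "A", "A", "A"]))  # 0.0
print(blau_index(["A", "B", "C", "D"]))  # 0.75
```

Whether a higher score actually means a more open person is exactly the open question; the index only measures the spread of the network, not the intent behind it.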

Are the better hosts those with more diverse networks, or those who practice homophily? A little machine learning can help determine who makes a good match and why. The problem of discrimination in peer-2-peer networks is not an easy one to fix. I just think it's really cool that machine learning may be a way to find a solution.
